Richard Bluff is the Production Visual Effects Supervisor for Disney+’s The Mandalorian. We spoke to Richard in March 2020 about season one of the hit Star Wars show, and we recently caught up with him again to discuss the advances in ILM StageCraft 2.0 that were used for the new LED stage built for the second season. The new stage is not only bigger, but the team also pioneered new techniques that could have a profound effect on how such stages are conceived moving forward.

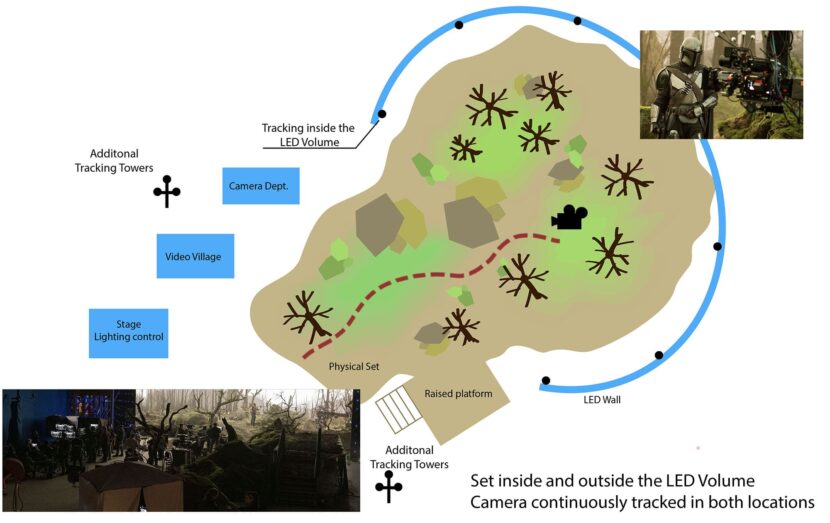

“When we were doing season one, we spent months and months discussing how big that stage should be,” he explained. “With the success we were having, we felt as though this season we could be less conservative and we could try different things, different shapes. So, one of the things that we wanted to do was to have a different shape and a bigger stage, such that we can have longer walk-and-talks.” Naturally, as the stage grew in physical size, so did the technical task of driving even more visible pixels. For the second season, ILM moved to an in-house real-time renderer, dubbed Helios, and the production also broke with conventional thinking about how the stage could be filmed. Many filmmakers think of the new LED stages as a circular stage, or volume, inside which real-time 3D backgrounds are captured in camera. While this remains true, ILM and the production team extended the concept to tracking the camera both inside and outside the volume.

For key scenes, such as in episode 5 of season 2, the production extended a practical set out of the volume into the sound stage beyond it. In the episode The Jedi, written and directed by Dave Filoni, the Mandalorian tracks down Ahsoka Tano (Rosario Dawson) outside the city of Calodan on the deforested planet of Corvus. The entire episode is set outdoors, but filming indoors in the volume allowed Filoni to control the lighting. When Mando is walking to find Ahsoka, he passes through a burnt forest until he comes upon an open clearing. Unlike in season one, the physical sets for this long continuous walk began outside the volume. The set was designed to hide as much of the back (technical) side of the LED walls as possible, and the motion capture volume was extended into the normally technical areas of the stage so that the camera could be tracked moving continuously from outside the LED walls into the middle of the volume. Given the nature of the lens, any sections of the LED stage seen at the back of this long dolly shot would be visually correct, but the lighting on the actors outside the LED volume was all traditional lighting. In camera, the movement and lighting appear seamless as the camera moves in one take from a traditional sound stage into an LED capture volume.

This is significant, as it shows how ILM is innovating to produce multiple volumes within one integrated solution. ILM is opening the door to multiple connected volumes, multiple vertical volumes. One can imagine new and vast shots that travel between different rooms or spaces, linking dynamic LED volumes via practical corridors, trenches or openings. Such a dynamic real-time world, possibly with moving sets, could allow action sequences that could never before have been designed for in-camera filming on a sound stage.

ILM has developed a very strong relationship with all of the other departments, such as production design, the art department and standby props (set decoration). “We scan what they source and what they build or paint,” points out Bluff. All props and on-stage elements are brought into UE4, which every department uses in pre-viz, including the virtual art department, which also uses VR for scouting and heads-of-department reviews. The final content can be created in Unreal, Houdini, 3ds Max or any number of other DCC packages, and then, for the shoot days, all of it is read seamlessly into ILM’s Helios real-time renderer for accurate display on the LED walls. Collaboration is central to the StageCraft ILM pipeline.

Boba Fett’s ship, for example, flies vertically and lands horizontally, but audiences had never seen how this worked. In The Mandalorian, the interior of the ship is shown to be a type of gimbal, allowing the ship to rotate while the crew area stays upright. In the behind-the-scenes Disney Gallery series, Jon Favreau commented that “Nobody’s ever seen what goes on inside that ship when it rotates. We’ve only seen the outside, so that was one of the inspirations for doing it on the stage, taking advantage of what the stage can do.”

When this was being designed, the whole creative team reviewed the visualizations from ILM, as the sequence was always going to require complex gaffing and rigging, and possibly a very complex practical ship gimbal inside the LED volume. Prior to final filming, there was a creative walkthrough where every department could comment. At this point, led by comments from Jon Favreau, the interior benches were modified and a set of physical benches was converted into digital content. “He decides that there are too many benches for the blocking that is needed,” recalls Bluff. With the complex interior rotation scene possibly being shot within the next 24 hours, the LED capture volume’s ‘brain bar’, ILM and all the other departments resolved the props and the lighting inside the ship, and rebuilt the virtual stage environment. “That’s really where the flexibility and power of the new software that ILM had developed for season two was evident: the ability to grab hold of any individual piece of geometry in this incredibly complex virtual environment and simply turn it off, working collaboratively with all the other departments interactively,” Bluff proudly points out.

The ILM team has developed various technical rules of thumb for filming. For example, no actor is filmed standing within 8 feet of the LED wall, and the team doesn’t shoot down the wall at an oblique or glancing angle. “We usually shoot roughly somewhere in the middle of the LED volume,” explains Bluff. In-camera testing and experience have proven vital. For example, the team was concerned about what moiré pattern from the LEDs a reflective body of water on the stage might show, and some people guessed the effect might be magnified. In episode 5, the production design called for a pond or pool beside a key fight: Ahsoka and the Mandalorian storm the city, killing the guards and freeing the citizens, and in the climactic fight Ahsoka duels Imperial Magistrate Morgan Elsbeth, ruler of the city. “We had a pool with small ripples on the water and you could walk into the stage and be 50 feet away from walking into the volume, and you could see with your naked eye the moiré patterns. It was clear to your eye that the effect was being magnified by the ripples on the water,” he explains. “And instantly you thought that this is never going to work.” Yet when the camera department set up the master ARRI camera in the same place, the pattern just wasn’t visible in the camera output. Conversely, there have been other complex setups where the camera picked up a moiré pattern that the crew could not see standing in the same spot. “It can be so strange, and that was one of the reasons why in season one, we decided that it was too risky to shoot a scene with water in the volume, but we could be more flexible in Season 2.”
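The two framing rules of thumb above can be expressed as a simple pre-shot check. This is only a minimal sketch under stated assumptions: the 8-foot minimum comes from the article, but the 45-degree limit for an “oblique” angle is a hypothetical value, and the names `Shot`, `view_angle_deg` and `shot_warnings` are illustrative, not part of ILM’s StageCraft tooling.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

MIN_ACTOR_DISTANCE_FT = 8.0   # from the article: no actor within 8 feet of the LED wall
MAX_VIEW_ANGLE_DEG = 45.0     # hypothetical: the article gives no number for "oblique"


def view_angle_deg(camera_dir: tuple[float, float], wall_normal: tuple[float, float]) -> float:
    """Angle between the camera axis and the wall normal (0 = straight on, 90 = grazing)."""
    dot = camera_dir[0] * wall_normal[0] + camera_dir[1] * wall_normal[1]
    norms = math.hypot(*camera_dir) * math.hypot(*wall_normal)
    # abs() ignores which way the normal points; clamp guards against rounding past 1.0
    cos_angle = min(1.0, abs(dot) / norms)
    return math.degrees(math.acos(cos_angle))


@dataclass
class Shot:
    actor_to_wall_ft: float   # shortest distance from the actor to the LED wall
    view_angle: float         # degrees, as returned by view_angle_deg()


def shot_warnings(shot: Shot) -> list[str]:
    """Return human-readable warnings for a planned setup (empty list = no concerns)."""
    warnings = []
    if shot.actor_to_wall_ft < MIN_ACTOR_DISTANCE_FT:
        warnings.append(f"actor is {shot.actor_to_wall_ft:.1f} ft from the wall")
    if shot.view_angle > MAX_VIEW_ANGLE_DEG:
        warnings.append(f"camera views the wall at a glancing {shot.view_angle:.0f} degrees")
    return warnings


if __name__ == "__main__":
    angle = view_angle_deg((1.0, 1.0), (0.0, -1.0))  # camera turned 45 degrees off the wall normal
    print(shot_warnings(Shot(actor_to_wall_ft=6.0, view_angle=angle)))
```

As the pool example shows, a check like this can only flag known risks; the team still relied on in-camera testing, since what the eye sees and what the camera records can differ.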

In season 2, Dave Filoni really wanted the dramatic duel to be mirrored in water. “Dave had written it into his episode, and we found that if there is ever a condition within the physical (LED) space that can bring the lighting and wrap it further around the actors, it cements them in that world.” For example, if the ground is reflective due to the floor covering, or has a sheen from water after rain, the team found that this immersed the actors in wonderfully believable ways. The lighting in the Magistrate’s courtyard “was just one of those sets that really made the technology sing,” says Bluff.

Another complex aspect is the use of smoke or atmosphere inside the volume. For season one, “we didn’t have the flexibility in the toolset to be able to add participating media in content and have the real-time control that we wanted,” recalls Bluff. “From the beginning, the rule of thumb was that we should avoid it, and we should add it in post, if necessary.” Once the team moved to season two, the series’ head creatives, Jon Favreau and Dave Filoni, wanted to push the boundaries of the technology and add participating media. “This was because we all recognized scenes from season one where we felt shots would have benefited from having it, even when we were in the volume,” says Bluff. Using StageCraft 2.0 with ILM’s Helios render engine, the team introduced participating media that the supervisors could dial in and out as needed in real time. They achieved this by working very closely with the director of photography, the gaffer and the special effects (practical) team.

Smoke, fog or any participating media is very hard to manage, as there must be no obvious change in density or light interaction at the transition from physical smoke in the volume to the effect rendered on the LED walls. The team developed a system for recording the atmospheric levels of the smoke pumped into the set. “Then we would dial that into what was being displayed on the screen to make sure that the handoff from physical to digital worked. As soon as the physical smoke dipped below a certain level, we would pump it back up again to that threshold that we all liked, to make sure it matched.” The ILM team not only wanted to avoid showing an artificial divide between the two atmosphere levels, but also needed to maintain a reliable, repeatable level should the production need to return for more shots or pickups later. It is one thing to get something believable in the moment, but ILM prides itself on documenting setups and being able to dial them back reliably and quickly.
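The handoff described above amounts to a simple threshold loop: record the agreed haze level for a setup, render that level on the wall, and top up the physical haze whenever the measurement drifts below it. The sketch below is hypothetical and illustrative only; it assumes a normalized density reading between 0 and 1 and an arbitrary tolerance, and the class and method names are not from ILM’s Helios or StageCraft software.

```python
from __future__ import annotations


class AtmosphereMatcher:
    """Hypothetical sketch of matching physical haze to rendered haze per setup."""

    def __init__(self, tolerance: float = 0.05):
        # Assumed: how far the physical reading may fall below the agreed level
        # before the haze machine is run again. Units are normalized density.
        self.tolerance = tolerance
        # Recorded "look" per setup, kept so pickups can dial the same level back in.
        self.setups: dict[str, float] = {}

    def record(self, setup_id: str, measured_density: float) -> None:
        """Store the haze level everyone agreed on for this setup."""
        self.setups[setup_id] = measured_density

    def update(self, setup_id: str, measured_density: float) -> tuple[float, bool]:
        """Return (density to render on the LED wall, whether to pump more haze)."""
        target = self.setups[setup_id]
        # The wall always shows the recorded level, so the digital side stays fixed...
        run_haze = measured_density < target - self.tolerance
        # ...and the physical side is topped up whenever it dips below the threshold.
        return target, run_haze


if __name__ == "__main__":
    matcher = AtmosphereMatcher(tolerance=0.05)
    matcher.record("courtyard", 0.40)
    print(matcher.update("courtyard", 0.38))  # still within tolerance
    print(matcher.update("courtyard", 0.30))  # dipped too far: run the haze machine
```

Keeping the rendered level pinned to the recorded value, rather than chasing every fluctuation in the physical reading, is one way to get the repeatability the article describes: returning for a pickup means looking up the stored level, not re-judging the look by eye.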

By expanding what the LED capture volume is, how it can be filmed and how it can be used in future projects, the ILM team is rapidly expanding the fundamentals of sound stage work. Bluff is excited by what this opens up. Rather than treating even the physical size of an LED stage as an absolute limit, the ILM team is innovating in ways that open up huge opportunities for all filmmakers. “What we thought were limitations were really interesting and are being solved in creative ways, because everybody is contributing. From the writers to the directors, and DPs to the heads of department and crew: everyone has a much better understanding of the technology now,” he comments. “The gloves are off, and now we are just asking: what else can we do? How can we use this technology in new and interesting ways to tell more compelling stories?”