OpenAI GPT-n models: Shortcomings & Advantages in 2024

Generative Pre-trained Transformers (GPT) are a type of Large Language Model (LLM), also called a foundation model. The technology was popularized by a series of deep learning-based language models built by the OpenAI team. These models are known for producing human-like text in numerous situations. However, they have limitations, such as a lack of logical understanding and a tendency to hallucinate, which limit their commercial functionality.

In this article, we describe how GPT-3 works, why it matters, its use cases, and its challenges to inform managers about this valuable technology.

What is GPT-3?

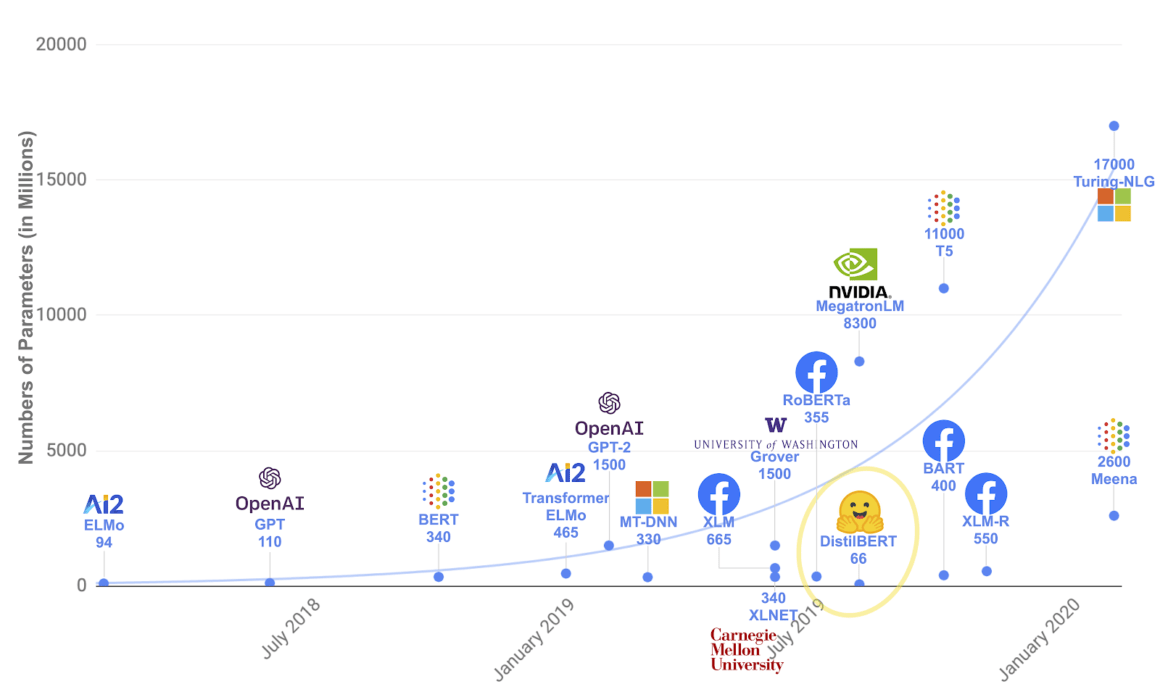

OpenAI researchers developed GPT-1, GPT-2 and GPT-3 in succession, each a more complex model that produced more human-like text than the last. They trained these increasingly complex models on increasingly large text corpora. Across GPT-1, GPT-2 and other models in the field, the number of parameters increased rapidly over time (Figure 1). By 2020, GPT-3 had reached 175 billion parameters, dwarfing its competitors.

Figure 1. DistilBERT market presence.
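To put the growth in parameters into numbers, the short sketch below computes how much larger each GPT generation is than the one before it. The 175 billion figure comes from this article; the GPT-1 and GPT-2 counts (117 million and 1.5 billion) are not stated here and are taken from the models' published papers. The function and variable names are our own.

```python
# Parameter counts: GPT-3 as stated in this article; GPT-1 and GPT-2
# as reported in their respective papers (not stated in this article).
PARAMETER_COUNTS = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}


def growth_factors(counts):
    """Return how many times larger each model is than its predecessor."""
    names = list(counts)  # dicts keep insertion order
    return {
        f"{prev} -> {curr}": counts[curr] / counts[prev]
        for prev, curr in zip(names, names[1:])
    }


for step, factor in growth_factors(PARAMETER_COUNTS).items():
    print(f"{step}: about {factor:.0f}x more parameters")
```

Under these figures, the jump from GPT-2 to GPT-3 is roughly two orders of magnitude, far larger than the jump from GPT-1 to GPT-2.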

How does it work?

GPT-3 is a pre-trained NLP system trained on a dataset of roughly 500 billion tokens that includes Wikipedia and Common Crawl, a large archive of crawled web pages.

It is claimed that GPT-3 does not require domain-specific training thanks to the comprehensiveness of its training dataset.

Why does it matter?

GPT-3 has the potential to automate tasks that require language understanding and technical sophistication.

Examples show that it can interpret complex documents, launch actions, create alerts or generate code. Application areas include technology, customer service (e.g. assisting with customer queries), marketing (e.g. writing attractive copy) or sales (e.g. communicating with potential customers).

Is it free?

GPT-3 is closed source, and Microsoft has licensed its exclusive use. Therefore, it is not free. This was widely criticized in the tech community because OpenAI, whose mission is to benefit all of humanity, chose to work exclusively for the benefit of one of the largest tech companies.

What are its use cases?

GPT-3 is not commonly used in production. Below you can see some demonstrations of its capabilities:

1. Coding

There are numerous online demos in which users show GPT-3’s ability to turn human language instructions into code. However, please note that none of these are robust, production-ready systems. They are only demonstrations of GPT-3’s potential:

Python

GPT-3 has been able to code basic tasks in Python.

SQL

GPT-3 can automatically generate SQL statements from simple text descriptions without the assistance of human operators.
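Demos of this kind typically rely on few-shot prompting: the model is shown a handful of question and SQL pairs and then asked to continue the pattern. The sketch below only assembles such a prompt as a string; it does not call any model. The schema, the example pairs and the function name are hypothetical and chosen purely for illustration.

```python
from typing import Sequence, Tuple


def build_sql_prompt(
    schema: str,
    examples: Sequence[Tuple[str, str]],
    question: str,
) -> str:
    """Assemble a few-shot prompt that ends where the model should write SQL."""
    parts = ["Translate each question into a SQL query.", f"Schema: {schema}", ""]
    for example_question, example_sql in examples:
        parts += [f"Question: {example_question}", f"SQL: {example_sql}", ""]
    # The prompt ends with an open "SQL:" line for the model to complete.
    parts += [f"Question: {question}", "SQL:"]
    return "\n".join(parts)


# Hypothetical schema and examples, for illustration only.
prompt = build_sql_prompt(
    schema="customers(id, name, country, signup_date)",
    examples=[
        ("How many customers are there?", "SELECT COUNT(*) FROM customers;"),
        ("List customers from France.",
         "SELECT name FROM customers WHERE country = 'France';"),
    ],
    question="Which customers signed up in 2020?",
)
print(prompt)
```

Whatever the model returns for such a prompt should be treated as untrusted text and reviewed before it is run against a real database.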

2. Machine Learning/Deep Learning Frameworks

GPT-3 has been used to build code for machine learning and deep learning frameworks like Keras.

3. DevOps Services

It has been used to create, delete or list DevOps services on the cloud. If it could work in a robust, predictable way, it would be able to automate the management of these services.

4. Front end design

It can generate website layouts according to user specifications, using CSS or JSX.

5. Chatbots

GPT-3 is capable of holding human-like conversations. Although it still has major areas for improvement, as discussed in the ‘What are its limitations?’ section, it has the potential to improve today’s chatbots.

Without any case-specific training, it is able to translate, answer abstract questions, and act as a search engine that returns exact answers with source links.

6. Auto-Completion

GPT-3 was built for auto-completion and is the most human-like system for that task, as explained by the IDEO team, who used it as a brainstorming partner.
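To make the idea of auto-completion concrete, here is a deliberately tiny sketch: it counts which word follows which in a small text and then extends a prompt with the most frequent follower. This is a toy bigram model, not how GPT-3 is implemented. GPT-3 uses a Transformer neural network over subword tokens, but the underlying task of predicting what comes next is the same. The corpus and function names are our own.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def autocomplete(counts, prompt, max_words=5):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never seen with a follower
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Tiny illustrative corpus; real models train on billions of tokens.
corpus = "the model reads text . the model writes text . the prompt guides it"
bigrams = train_bigrams(corpus)
print(autocomplete(bigrams, "the", max_words=1))  # prints "the model"
```

A model this simple only looks at the single previous word, which is why its completions quickly become repetitive; large language models condition on thousands of preceding tokens instead.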

What are its limitations?

- The source code remains private

- Users can only experiment using a black-box API

- Only authorized users have access to the system, and some well-known researchers were not given initial access to the tool.

More fundamentally, GPT-3 is far from Artificial General Intelligence (AGI). It is capable of understanding the structure of language and putting words in a correct, natural-sounding sequence, which is a big feat. However, it lacks an understanding of word meaning and can therefore generate meaningless or wrong statements.

To learn more about chatbot failures you can read our top 9 chatbot failures article.

The latest updates in GPT-3 technology

ChatGPT

ChatGPT is a variant of the GPT-3 language model that has been specifically designed for dialogue generation. Its initial release used the Legacy GPT-3.5 model, which is still the free version available to all users.

Since its release on November 30, 2022, ChatGPT has stormed the search results. It reached 1 million users in just 5 days (see the figure below). Along with its fame, it has raised concerns among a diverse group of professionals, such as teachers and coders. For example, teachers fear that students will use the chatbot to write excellent papers, and coders fear that its coding performance will make it a replacement for them. [1]

Differences from GPT-3

One of the key differences between ChatGPT and GPT-3 is that ChatGPT has been trained on a large dataset of conversational data. This allows it to generate text that is more suited to chatbot conversations, as it has learned the patterns and conventions of human conversation. In contrast, GPT-3 is a general-purpose language model that has not been specifically trained for dialogue generation.

Another difference between the two models is their size. ChatGPT is a smaller model than GPT-3, with a smaller number of parameters. This makes it faster and more efficient to use, which is important for applications like chatbots that need to respond to user input in real time.

Overall, ChatGPT is a specialized version of GPT-3 that is designed to generate natural language text for chatbot conversations. It has been trained on conversational data and is a smaller and more efficient model than GPT-3.

Training Method

To optimize the language model, Reinforcement Learning from Human Feedback (RLHF) was used. [2] First, human AI trainers wrote conversations in which they played both the user and the AI assistant, with access to suggestions generated by the model itself to help them compose their responses.

Second, a reward model (RM) for reinforcement learning was created. Comparison data was collected from conversations between AI trainers and the chatbot, with trainers ranking several alternative model responses by quality. The reward model trained on these rankings was then used to fine-tune the language model with Proximal Policy Optimization (PPO). The process was repeated several times to improve the model. You can see these steps in the figure below.
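The ranking step can be sketched in a few lines. A ranked list of responses is turned into (better, worse) pairs, and the reward model is penalized whenever it scores the worse response higher. The loss used below, the negative log-sigmoid of the score difference, is the pairwise form commonly used for reward models in the RLHF literature; it is an assumption here, since the ChatGPT announcement does not spell out the exact loss. The function names and the example scores are hypothetical.

```python
import math
from itertools import combinations


def pairs_from_ranking(ranked_responses):
    """Turn a best-to-worst ranking into (better, worse) comparison pairs."""
    return list(combinations(ranked_responses, 2))


def pairwise_ranking_loss(reward_better, reward_worse):
    """Return -log(sigmoid(reward_better - reward_worse)), computed stably."""
    diff = reward_better - reward_worse
    if diff > 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# Hypothetical ranking by a human trainer, and hypothetical scores
# that a reward model currently assigns to each response.
ranking = ["response_a", "response_b", "response_c"]  # best to worst
scores = {"response_a": 1.2, "response_b": 0.4, "response_c": 0.9}

for better, worse in pairs_from_ranking(ranking):
    loss = pairwise_ranking_loss(scores[better], scores[worse])
    print(f"{better} > {worse}: loss = {loss:.3f}")
```

In this example the pair (response_b, response_c) produces the largest loss, because the hypothetical reward model disagrees with the human ranking there. Training pushes the scores toward agreement, and the resulting reward model then provides the signal that PPO optimizes.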

Limitations

The program has some limitations, such as an outdated knowledge base that only goes up until 2021, a tendency to provide incorrect answers, and a tendency to use the same phrases repeatedly. Additionally, the program may sometimes claim that it cannot answer a question when given one version of it, but be able to answer a slightly modified version of the same question.

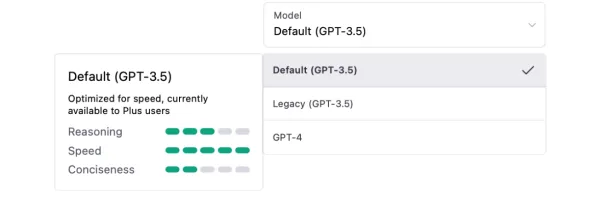

Default GPT-3.5

Default GPT-3.5 is a pro version of the legacy model, released at the beginning of March 2023. It was the paid version before GPT-4. According to OpenAI, this version is more concise and faster than the legacy version. However, there is no qualitative difference between the reasoning capabilities of the two versions.

The latest GPT model: GPT-4

Generative Pre-trained Transformer 4 (GPT-4) is OpenAI’s latest language model in the GPT series, released on March 14, 2023. Microsoft has confirmed that certain versions of Bing built on GPT technology were running GPT-4 prior to its official release. [3]

The training of GPT-4

Reinforcement learning from human feedback (RLHF) was used to train GPT-4. OpenAI used human feedback, including feedback provided by ChatGPT users, to improve GPT-4’s performance. It also collaborated with more than 50 specialists to obtain early feedback in areas such as AI safety and security.

The distinctive features of GPT-4

Visual input option

Although it cannot generate images as outputs, GPT-4 can understand and analyze image inputs.

Higher word limit

GPT-4 can process more than 25,000 words of text, whereas earlier models were limited to fewer than 3,000 words.
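Limits like these are usually defined in tokens rather than words. The sketch below converts a token limit into an approximate word budget and checks whether a text fits. The ratio of 0.75 words per token is a rough rule of thumb for English text, and the two context sizes (4,096 and 32,768 tokens) are our assumptions about which model variants the word figures refer to; neither is stated in this article.

```python
# Rough rule of thumb for English text; an assumption, not an exact ratio.
WORDS_PER_TOKEN = 0.75


def approx_word_capacity(context_tokens):
    """Estimate how many English words fit into a given token limit."""
    return int(context_tokens * WORDS_PER_TOKEN)


def fits_in_context(text, context_tokens):
    """Check a text against the estimated word budget (whitespace word count)."""
    return len(text.split()) <= approx_word_capacity(context_tokens)


# Assumed context sizes, for illustration only.
for label, tokens in [("earlier models", 4_096), ("GPT-4, largest variant", 32_768)]:
    print(f"{label}: about {approx_word_capacity(tokens):,} words")
```

Under these assumptions the estimates come out at about 3,000 and 25,000 words, in the same ballpark as the limits quoted above. For exact counts, the text has to be run through the model's actual tokenizer.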

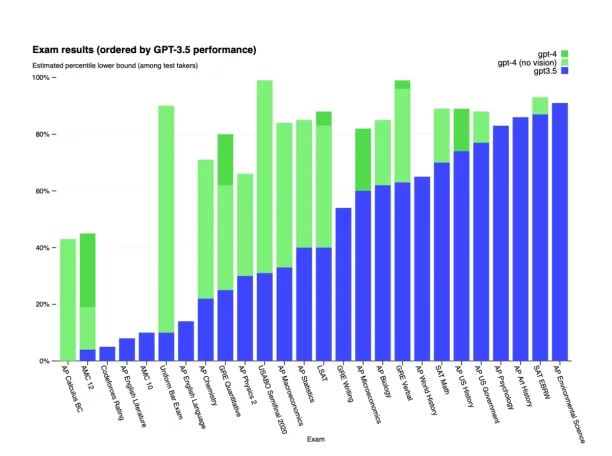

Advanced reasoning capability

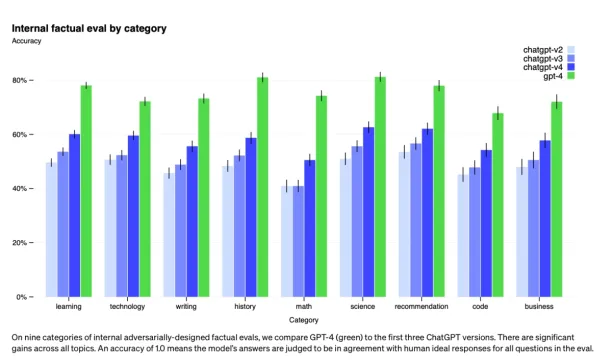

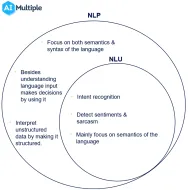

GPT-4 stands out from earlier versions in its natural language understanding (NLU) and problem-solving abilities. The difference may not be apparent in a casual trial, but test and benchmark results show that it outperforms earlier versions on more complex tasks.

Advanced creativity

As a result of its stronger language capabilities, GPT-4 is more creative than earlier models.

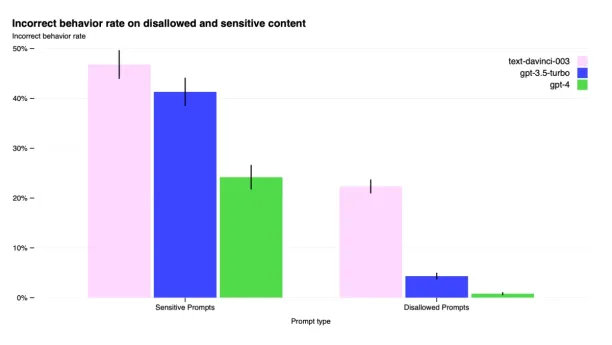

Adjustment for inappropriate requests

ChatGPT was criticized for answering inappropriate requests, such as requests to explain how to make bombs at home. OpenAI worked on this problem and made adjustments to prevent its language models from producing such content. According to OpenAI, GPT-4 is 82% less likely than GPT-3.5 to respond to requests for disallowed content.

Increase in fact-based responses

Another limitation of the earlier GPT models was that their responses were factually incorrect in a substantial number of cases. OpenAI states that GPT-4 is 40% more likely to produce factual responses than GPT-3.5.

Steerability

“Steerability” is a concept in AI that refers to a model’s ability to modify its behavior as required. GPT-4 incorporates steerability more seamlessly than GPT-3.5, allowing users to modify the default ChatGPT personality (including its verbosity, tone, and style) to better align with their specific requirements.
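In chat-style APIs, steering is typically done with a "system" message placed before the user's input. The sketch below only builds such a message list and does not call any API. The role names follow the chat message format OpenAI uses for its chat models; the persona text and the function name are hypothetical.

```python
def build_messages(persona, user_input):
    """Prepend a system message that sets tone, verbosity and style."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": user_input},
    ]


# Two hypothetical personas for the same question.
concise = build_messages(
    "You are a terse assistant. Answer in one sentence.",
    "What is a language model?",
)
tutor = build_messages(
    "You are a patient tutor. Explain step by step and ask a follow-up question.",
    "What is a language model?",
)

for messages in (concise, tutor):
    print(messages[0]["content"])
```

The user's question is identical in both cases; only the system message changes, which is what lets the same model respond in very different styles.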

For more detail on GPT-4, you can check our in-depth GPT-4 article.

What is next for GPT models?

GPT-3 stands as a state-of-the-art NLP system in terms of its scale of training data and processing capability. Elon Musk stated: “The rate of improvement from the original GPT to GPT-3 is impressive. If this rate of improvement continues, GPT-5 or 6 could be indistinguishable from the smartest humans”.

We find that optimistic.

- GPT-3 took tens or hundreds of millions of dollars to build.

- A single training run is estimated to cost $4.6 million, and it takes numerous training runs to fine-tune the training process.

- This is just the compute cost, which tends to be a fraction of overall costs. Wages of a large team of researchers (31 researchers co-authored the article) and the supporting engineering team would be another large cost item.

- GPT-3 is roughly 10x larger than the largest NLP model built before it.

- To achieve a similar improvement, researchers would need to put hundreds of millions or possibly billions of dollars into the project. This is not practical for a research project with limited commercial application.

- Though OpenAI achieved significant progress in creating human-like language, almost no progress has been made in creating a model with logical reasoning capabilities. It is very hard for commercial applications (outside the sphere of entertainment or human augmentation) to rely on a model with no logical reasoning.
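The cost argument above can be laid out as simple arithmetic. In the sketch below, only the $4.6 million per training run comes from this article. The number of runs and the share of total cost that goes to compute are hypothetical placeholders, chosen only to show how the estimate is built up.

```python
COST_PER_RUN_USD = 4_600_000  # estimate cited in this article


def estimate_total_cost(num_runs, compute_share):
    """Scale the per-run compute cost up to a rough total project cost.

    num_runs: how many training runs were needed (hypothetical input).
    compute_share: fraction of the total budget spent on compute
        (hypothetical input, between 0 and 1).
    """
    if not 0 < compute_share <= 1:
        raise ValueError("compute_share must be in (0, 1]")
    compute_cost = COST_PER_RUN_USD * num_runs
    return compute_cost / compute_share


# Hypothetical scenarios, for illustration only.
for runs, share in [(5, 0.5), (10, 0.5), (20, 0.25)]:
    total = estimate_total_cost(runs, share)
    print(f"{runs} runs, {share:.0%} compute share: ${total / 1e6:,.0f} million")
```

Even with modest assumptions, the total lands in the tens to hundreds of millions of dollars, which is the range given in the list above.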

Sources:

External Links

- 1. “AI bot ChatGPT stuns academics with essay-writing skills and usability.” The Guardian, 4 December 2022, https://www.theguardian.com/technology/2022/dec/04/ai-bot-chatgpt-stuns-academics-with-essay-writing-skills-and-usability. Accessed 12 December 2022.

- 2. “ChatGPT: Optimizing Language Models for Dialogue.” OpenAI, 30 November 2022, https://openai.com/blog/chatgpt/. Accessed 12 December 2022.

- 3. “Confirmed: the new Bing runs on OpenAI’s GPT-4.” Bing Blogs, 14 March 2023, https://blogs.bing.com/search/march_2023/Confirmed-the-new-Bing-runs-on-OpenAI%E2%80%99s-GPT-4/. Accessed 27 March 2023.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.
