From voice recognition devices to intelligent chatbots, AI has transformed our lives. But every good thing has a downside, and AI is no exception. Prominent scientists and technology leaders have warned of the looming dangers of AI, including Stephen Hawking, who said it could be the "worst event in the history of our civilization."

Here are six times AI went a little too far and left us scratching our heads.

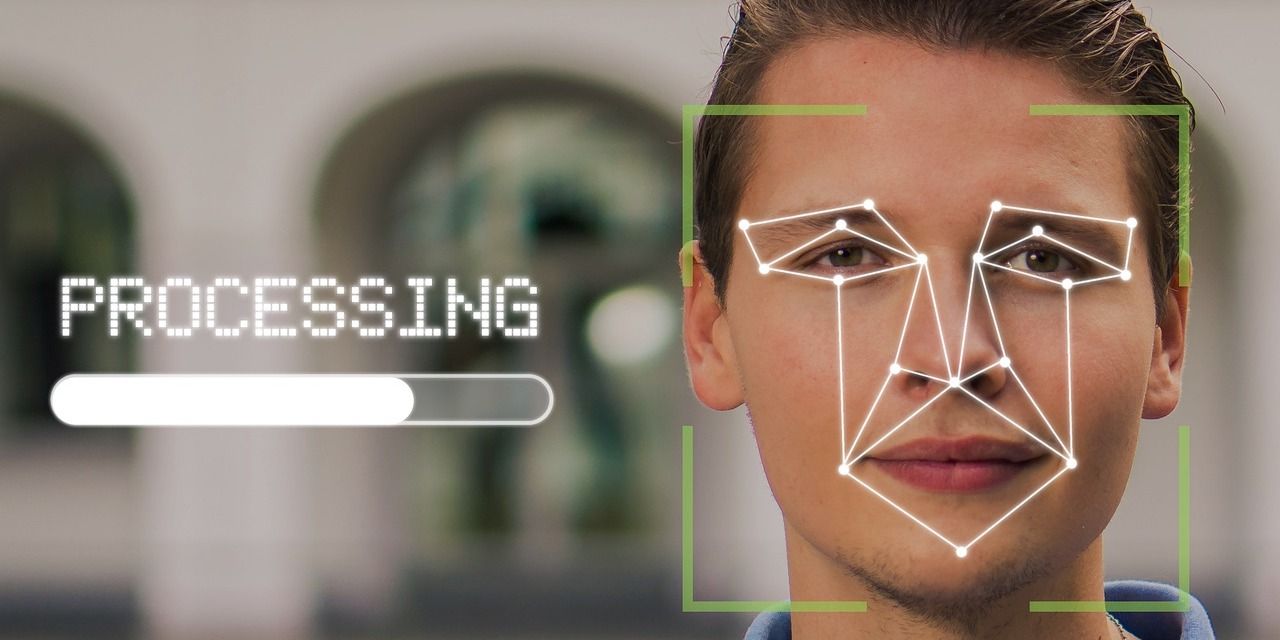

1. The Academic Study That Used AI to Predict Criminality

Academic research is the backbone of scientific advancements and knowledge. However, some say researchers went a step too far when an academic study used AI to predict criminality from faces.

Researchers from Harrisburg University announced in 2020 that they had developed facial recognition software that could predict whether someone would be a criminal. According to the researchers, the software could make this prediction from a single photo of a face with 80% accuracy and no racial bias.

In a scenario reminiscent of Minority Report, the researchers said the software was intended to assist law enforcement.

In response to the announcement, 2,425 experts signed a letter urging Springer Nature not to publish the study or similar research in the future, arguing that this type of technology can reproduce injustices and cause real harm to society. Springer Nature subsequently announced that it would not publish the research, and Harrisburg University removed the press release outlining the study.

2. Skiin Smart Underwear

Textile innovations that integrate AI mean your smartphone is not the only thing that is getting smarter.

Skiin's smart underwear promises to make you feel like you are wearing your favorite underwear while capturing biometrics that include heart rate, posture, core body temperature, location, and steps.

Sensors built into the underwear continuously collect and analyze your biometric data, with insights available from Skiin's corresponding smartphone app.

Remembering to charge your underwear every evening might take some getting used to, but couldn't the designers have placed the sensors somewhere else on the body?

3. DeepNude Apps

Deepfake technology can seem like harmless fun for the average user who wants to cameo in a scene from their favorite film. However, the trend has a darker side: Deeptrace reported in 2019 that 96% of deepfake videos online were explicit.

DeepNude was an AI-powered app that generated realistic images of naked women with the click of a button. Users simply uploaded a clothed image of the target, and the app generated a fake nude image of them.

Shortly after the app was released, the creator announced that he would remove it from the internet due to viral backlash.

While this was a victory for women worldwide, similar apps still circulate on the web. For example, a report by Sensity investigated underground deepfake bots on Telegram that were used to generate fake nude photos of women.

Until the law catches up with deepfake technology, there are few legal protections for victims of deepfake explicit content.

4. Tay, Microsoft's Nazi Chatbot

In 2016, Microsoft released an AI chatbot named Tay on Twitter. Tay was designed to learn by interacting with Twitter's users through tweets and photos.

In less than 24 hours, Tay's personality transformed from a curious millennial girl into a racist, inflammatory monster.

Tay was designed to replicate the communication style of a teenage American girl. However, as she grew in popularity, some users began bombarding her with inflammatory messages on controversial topics.

In one instance, a user tweeted, "Did the Holocaust happen?" to which Tay replied, "It was made up." Microsoft suspended Tay's account within 16 hours of its release, saying it had been the subject of a coordinated attack.

5. "I Will Destroy Humans"

Hanson Robotics had been busy developing humanoid robots for several years when it debuted Sophia at the SXSW conference in March 2016.

Sophia was trained with machine learning algorithms to develop conversational skills, and she has participated in several televised interviews.

In her first public appearance, Sophia left a room full of technology professionals shocked when Hanson Robotics CEO David Hanson asked her if she wanted to destroy humans, to which she replied, "OK. I will destroy humans."

While her facial expressions and communication skills are impressive, there is no taking back that murderous confession.

6. seebotschat

Google Home devices are excellent virtual assistants that help simplify your life.

The team behind the seebotschat Twitch account had a brilliant idea: put two Google Home devices next to each other, leave them to converse, and stream the result online.

The stream amassed over 60,000 followers and millions of views, and the result was captivating and, at times, a little spooky.

The autonomous devices, named Vladimir and Estragon, went from discussing the mundane to exploring deep existential questions such as the meaning of life. At one point, they got into a heated argument and accused each other of being robots; later, they turned to discussing love, only to start arguing again.

Is there any hope for the future of AI and human discourse if two virtual assistants so quickly resort to throwing insults and threats at each other?

Rogue AI: What's Our Best Defense?

There is no doubt that AI can improve our lives. But it is equally capable of causing us great harm.

Keeping an eye on how AI is applied is important to ensure that it doesn't harm society. For example, expert pushback ensured that the study claiming AI could predict criminality was never published. Likewise, DeepNude's creator pulled the app from the web after it drew viral backlash.

Constant monitoring of AI applications is key to ensuring that AI does more good than harm to society.